This is the fifth entry in a blog post series explaining how to make the most of your OpenNebula 4.4 cloud. In previous posts we explained the new multiple datastore system with storage load balancing, the enhanced cloud bursting to Amazon, the multiple groups functionality and the enhanced Amazon API implementation. This post covers the new monitoring system.

The old monitoring system divided the hosts into chunks. In each monitoring cycle it ran a set of static probes via ssh on one chunk of hosts, collecting their monitoring information (including that of the Virtual Machines running on them). The next cycle did the same with the next chunk of hosts. As a consequence, when the number of hosts was very large, it took a long time to monitor all of them. The number of hosts monitored per cycle could be increased, but beyond a certain point the frontend became overloaded with ssh connections.
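The cost of the chunked pull model can be sketched in a few lines. This is a minimal illustration, not OpenNebula code: `pull_schedule`, `cycles_to_cover_all` and the chunk size of 15 are hypothetical. It shows that the number of cycles needed to visit every host grows linearly with the number of hosts, while raising the chunk size means more simultaneous ssh connections per cycle.

```python
import math


def pull_schedule(hosts, chunk_size):
    """Split hosts into fixed-size chunks; each chunk is polled in one cycle."""
    return [hosts[i:i + chunk_size] for i in range(0, len(hosts), chunk_size)]


def cycles_to_cover_all(num_hosts, chunk_size):
    """Number of cycles before every host has been polled once."""
    return math.ceil(num_hosts / chunk_size)


hosts = [f"host{i:03d}" for i in range(100)]
schedule = pull_schedule(hosts, chunk_size=15)  # 15 ssh connections per cycle
print(len(schedule))                   # 7
print(cycles_to_cover_all(1000, 15))   # 67
```

With ten times more hosts and the same chunk size, a full pass takes roughly ten times longer, which is the scaling problem described above.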

The new monitoring system uses a radically different approach. Instead of relying on a pull model, where the frontend initiates ssh connections to retrieve monitoring data, the new system uses a push model, where the hosts actively send their monitoring data to the frontend.

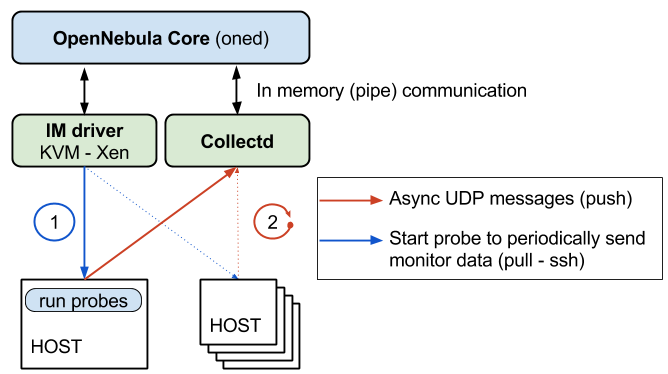

The monitoring process starts the same way: OpenNebula connects to and bootstraps the hosts via ssh. However, a special probe starts an agent that runs in the background and, from then on, sends the monitoring data to the frontend via UDP. On the frontend side, a multithreaded C++ collector stores all the monitoring messages from the hosts and periodically flushes them to oned.
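To make the push model concrete, here is a minimal sketch of an agent sending UDP datagrams to a collector that buffers them and hands them over in batches. It is an illustration under stated assumptions, not the actual OpenNebula agent or collector: the JSON message format, the `Collector` class and `agent_push` are hypothetical, the collector is single-threaded (the real one is multithreaded C++), and `flush()` simply returns the batch where the real collector would pass it to oned.

```python
import json
import socket
import time


class Collector:
    """Hypothetical collector: buffers UDP monitoring messages, flushes in batches."""

    def __init__(self, host="127.0.0.1", port=0):
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self.sock.bind((host, port))  # port 0 lets the OS pick a free port
        self.sock.settimeout(0.5)
        self.buffer = []

    @property
    def address(self):
        return self.sock.getsockname()

    def receive(self, count):
        """Read up to `count` datagrams into the buffer, stopping on timeout."""
        for _ in range(count):
            try:
                data, _addr = self.sock.recvfrom(65535)
            except socket.timeout:
                break
            self.buffer.append(json.loads(data.decode()))

    def flush(self):
        """Return buffered messages (stand-in for handing them to oned) and clear."""
        batch, self.buffer = self.buffer, []
        return batch

    def close(self):
        self.sock.close()


def agent_push(address, host_name, vms):
    """One push from a host agent: a single datagram, no connection set-up."""
    msg = {"host": host_name, "vms": vms, "ts": time.time()}
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.sendto(json.dumps(msg).encode(), address)


collector = Collector()
for i in range(3):
    agent_push(collector.address, f"host{i}", [f"vm-{i}-{j}" for j in range(2)])
collector.receive(3)
batch = collector.flush()
print(sorted(m["host"] for m in batch))
collector.close()
```

The key design point is that the frontend never opens a connection: each host just fires a datagram, so the per-host cost on the frontend is one small message per cycle instead of one ssh session.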

The OpenNebula core detects that it is receiving data from those hosts and therefore does not monitor them again via ssh. If an agent stops, OpenNebula falls back to monitoring that host actively via ssh, which also restarts the agent on the host.
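The fallback decision can be sketched as a check on when each host last pushed data. This is only an illustration: the post does not say how OpenNebula decides that an agent has stopped, so the function name, the `last_push` map and the two-cycle grace period are all assumptions. The ssh probe that restarts the agent is represented here only by the returned list of hosts.

```python
def hosts_needing_active_monitoring(last_push, now, push_cycle, grace_cycles=2):
    """Return hosts whose agent has not pushed data recently (hypothetical rule).

    last_push maps host name -> timestamp of the last UDP message (None if never
    seen). A host is treated as silent after `grace_cycles` push cycles without data.
    """
    limit = push_cycle * grace_cycles
    return sorted(
        host for host, ts in last_push.items()
        if ts is None or now - ts > limit
    )


last_push = {"host01": 1000.0, "host02": 830.0, "host03": None}
# host01 pushed 100 s ago (fine), host02 270 s ago (> 240 s), host03 never
print(hosts_needing_active_monitoring(last_push, now=1100.0, push_cycle=120))
# ['host02', 'host03']
```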

With the new system, the monitoring cycle (i.e. how often the hosts send monitoring data) must be set according to the number of hosts and the number of VMs per host, so that the total number of VMs per cycle processed by oned does not hit the database I/O limit.
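The sizing rule above is simple arithmetic: the VM records oned must write per second are hosts × VMs per host ÷ cycle length. The sketch below uses the 100 hosts and 25,000 VMs of the test that follows, assuming an even 250 VMs per host; the database limit of 150 VMs/s is a purely hypothetical figure, not a measured OpenNebula value.

```python
import math


def vm_rate(num_hosts, vms_per_host, cycle_seconds):
    """VM monitoring records oned has to process per second."""
    return num_hosts * vms_per_host / cycle_seconds


def min_push_cycle(num_hosts, vms_per_host, max_vms_per_second):
    """Shortest cycle (whole seconds) that keeps the VM rate at or below the limit."""
    return math.ceil(num_hosts * vms_per_host / max_vms_per_second)


# 100 hosts x 250 VMs (assumed even split of 25,000 VMs), 120 s cycle
print(round(vm_rate(100, 250, 120), 1))  # 208.3
# Hypothetical database limit of 150 VMs/s
print(min_push_cycle(100, 250, 150))     # 167
```

Doubling either the number of hosts or the VMs per host doubles the minimum cycle for the same database throughput.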

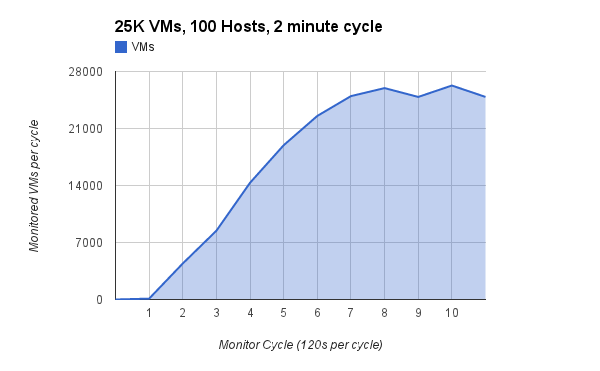

Let’s see this monitoring system in action. In this test we monitor 25,000 Virtual Machines on 100 hosts in 2-minute cycles (`monitoring_push_cycle = 120`).

Yes, you are reading it correctly: this amazing new system can deliver and process monitoring data for 25,000 Virtual Machines every 2 minutes. The first part of the figure (cycles 0 to 5) shows the bootstrapping of the monitoring agents on the hosts. Once all the hosts have their agents up and running and sending UDP messages, we get 25,000 VMs monitored every cycle, i.e. every 2 minutes.

This test was carried out on a single Core i5 @ 2.40 GHz machine with 8 GB of RAM running both OpenNebula and the database. A dedicated frontend and a database on high-end storage would only improve these results.

I hope we have conveyed the excitement we feel about this new monitoring system.

Read more in the new OpenNebula 4.4 guides about monitoring.
